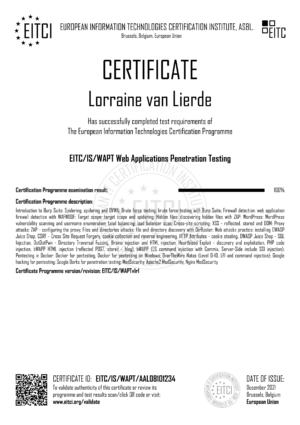

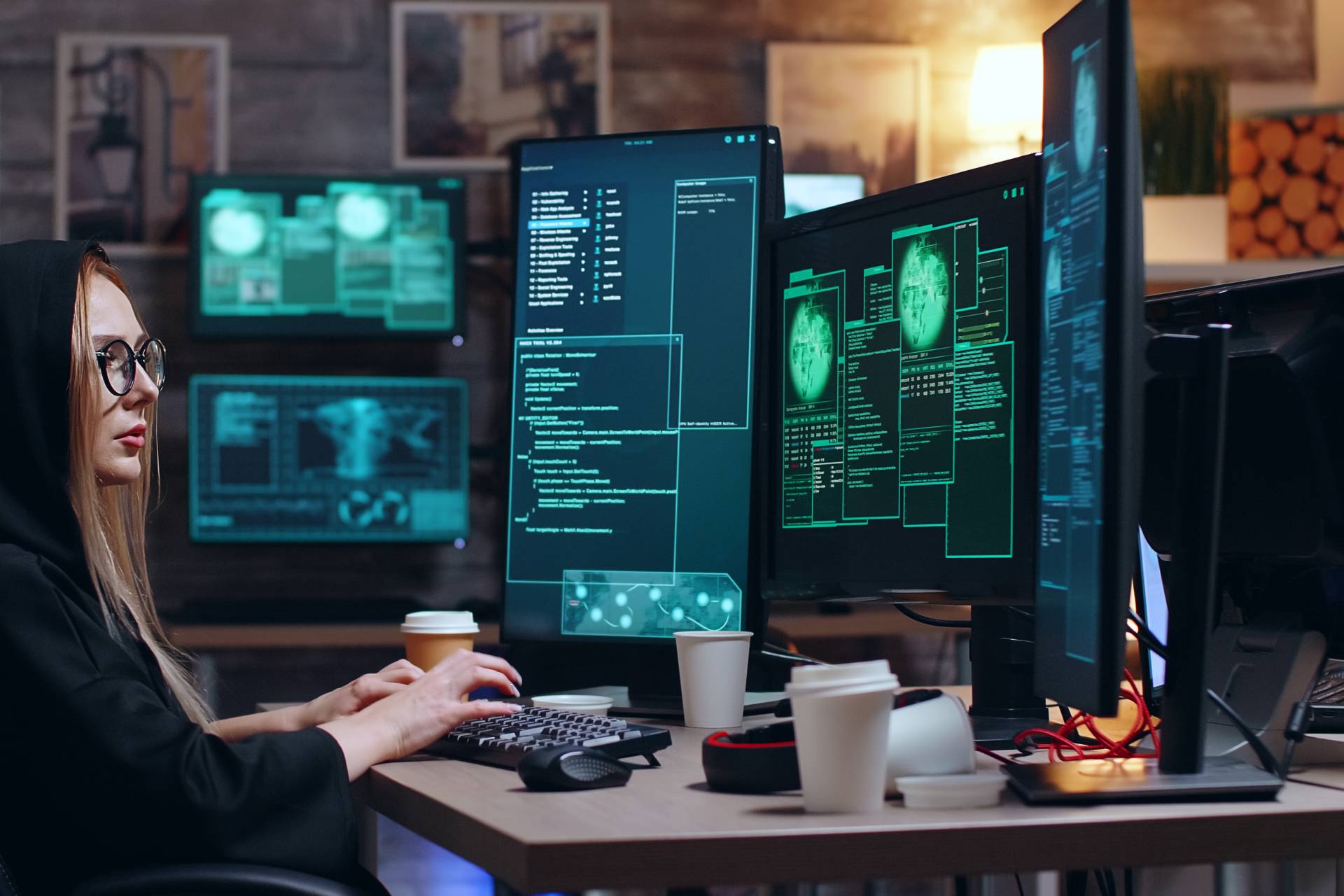

A fully online, internationally accessible European IT Security Certification Academy based in Brussels, EU, governed by the European Information Technologies Certification Institute (EITCI), a standard for attesting digital skills in cybersecurity.

The main mission of the EITCA Academy is to disseminate worldwide an EU-based, internationally recognized, formal competency certification standard that is easily accessible online, supporting the European and global Information Society and bridging the digital skills gap.

The standard is based on the comprehensive EITCA/IS Academy programme, which includes 12 relevant individual EITC Certificates. The EITCA Academy enrolment fee covers the full cost of all included EITC programmes, providing participants with all constituent EITC Certificates along with the corresponding EITCA Academy Certificate. The programme is implemented online.

The EITCA/IS Academy fee is € 1,100, but thanks to the EITCI subsidy it can be reduced by 80% (i.e. from € 1,100 to € 220) for all participants, regardless of their country of residence or nationality, in support of the European Commission's Digital Skills and Jobs Coalition.

The EITCA/IS Academy fee includes Certification, selected reference educational materials and didactic consultancy.

How it works in 3 simple steps

(after you choose your EITCA Academy or a selected range of EITC certificates out of the full EITCA/EITC catalogue)

Learn & practice

Follow the referenced online video materials to prepare for the exams. There are no fixed classes; you study on your own schedule, with no time limits. Expert online consultations are available.

Get EITCA Certified

After preparing, you take the EITC exams fully online. After passing all EITC exams, you obtain your EITCA Academy Certificate. Retakes are unlimited at no additional fee.

Launch your career

The EITCA/IS Academy Certification, with its 12 constituent European IT Certificates (EITC), will formally attest your expertise in cybersecurity. Certify your professional skills.

Learn & practice online

Follow the referenced online video materials to prepare for the exams. There are no fixed classes; you study on your own schedule, with no time limits. Expert consultations are included.

- EITCA Academy groups EITC programmes

- Each EITC programme is ca. 15 hours long

- Each EITC programme defines the scope of an online EITC exam with unlimited retakes

- Access the EITCA Academy platform 24/7, with curriculum reference materials and consultations, and no time limits

- You also gain access to required software

Get EITCA Certified

After preparing, you take the online EITC exams (with unlimited retakes at no additional fee) and are issued your EITCA Certification and 12 EITC Certificates.

- EITCA/IS IT Security Academy Certificate attesting your cybersecurity expertise

- Detailed EITCA/IS Diploma Supplement

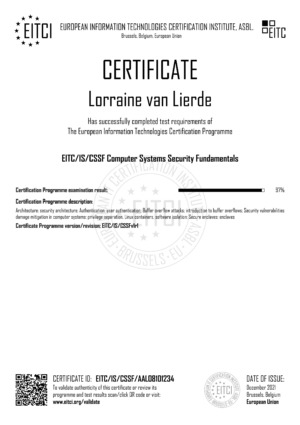

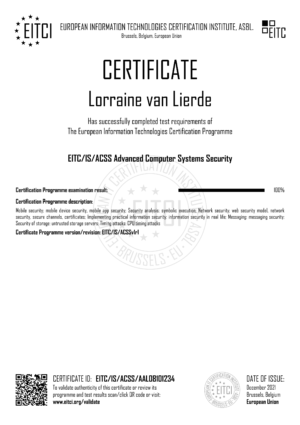

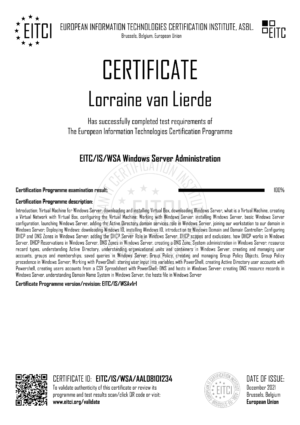

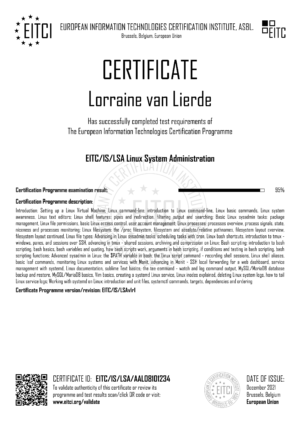

- 12 constituent EITC Certificates in IS

- All documents issued and confirmed in Brussels through a fully online procedure

- All documents issued in a permanent digital form with e-validation services

Launch your career

The EITCA Academy Certificate with a supplement and its constituent EITC Certificates attest your expertise.

- Include and showcase it in your CV

- Present it to your contractor or employer

- Prove your professional advancement

- Show your activity in international education and self-development

- Find your desired job, get a promotion or win new contracts

- Join the EITCI Cloud community

EITCA/IS INFORMATION SECURITY ACADEMY

Cryptography - Network Security - Pentesting

- Professional European Cybersecurity Certification programme implemented remotely online, with selected high-quality reference learning materials covering the curriculum in a step-by-step didactic process

- Comprehensive programme including 12 EITC Certifications (ca. 180 hours) with access to software, expert consultations and unlimited exam retakes at no additional fee; it can be completed in as little as 1 month, but there are no time limits

- Examination and certification procedures implemented fully online, with EITCA IT Security Certificates issued in Brussels in a verifiable digital form and with permanent access to the certification curriculum and online platforms

- Fully self-contained programme covering the foundations, also suitable for beginners (no prior experience with cybersecurity is needed), quickly focusing on practical aspects of IT security to formally attest professional skills in this field

All of the below EITC certification programmes are included in the EITCA/IS Information Security Academy

EITCI Institute

The EITCA Academy comprises a series of topically related EITC Certification programmes, which can also be completed separately and which on their own correspond to industry-level standards of professional IT skills attestation. Both EITCA and EITC Certifications provide important confirmation of the holder's relevant IT expertise and skills, empowering individuals worldwide by certifying their competencies and supporting their careers. The European IT Certification standard, developed by the EITCI Institute since 2008, aims to support digital literacy, disseminate professional IT competencies through lifelong learning, and counter digital exclusion by supporting people living with disabilities, people of low socio-economic status, and pre-tertiary school youth. This conforms with the guidelines of the Digital Agenda for Europe policy, as set out in its pillar on promoting digital literacy, skills and inclusion.

EITCA/IS INFORMATION SECURITY ACADEMY

€ 1,100.00

- EITCA/IS Certificate & 12 EITC Certificates

- 180 hours (may be completed in 1 month)

- Learning & exams: Online, on your schedule

- Consultations: Unlimited, online

- Exam retakes: Unlimited, free of charge

- Access: Instant with all necessary software trials

- 80% EITCI subsidy, no time limit to finish

- One-time fee for the EITCA/IS Academy

- 30-day full money-back guarantee

Single EITC Certificate

€ 110.00

- Single chosen EITC Certificate

- 15 hours (may be completed in 2 days)

- Learning & exams: Online, on your schedule

- Consultations: Unlimited, online

- Exam retakes: Unlimited, free of charge

- Access: Instant with all necessary software trials

- No EITCI subsidy, no time limit to finish

- One-time fee for chosen EITC Certification(s)

- 30-day full money-back guarantee

The EITCI DSJC Subsidy code waives 80% of the fees for EITCA Academy Certifications for a limited number of places. The subsidy code has been applied to your session automatically, and you can proceed with your chosen EITCA Academy Certification order. If you prefer to save the code for later use (before the deadline), you can send it to your e-mail address. Please note that the EITCI DSJC Subsidy is valid only within its eligibility period. The EITCI DSJC subsidized places for EITCA Academy Certification Programmes are available to all participants worldwide. Learn more about the EITCI DSJC Pledge.